The UK’s approach to AI regulation shows a “complete misunderstanding” and could pose multiple threats to security, an AI pioneer has warned.

Professor Stuart Russell said the government’s refusal to regulate artificial intelligence with tough legislation was a mistake – increasing the risk of fraud, disinformation and bioterrorism. It comes as Britain continues to resist creating a tougher regulatory regime due to fears legislation could slow growth – in stark contrast to the EU, US and China.

“There is a mantra of ‘regulation stifles innovation’ that companies have been whispering in the ear of ministers for decades,” Prof Russell told The Independent. “It’s a misunderstanding. It’s not true.”

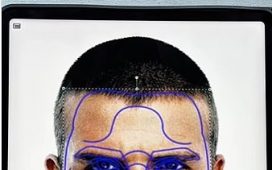

Professor Stuart Russell said the UK’s refusal to introduce tough AI regulation was a misunderstanding

(Creative Commons)

“Regulated industry that provides safe and beneficial products and services – like aviation – promotes long-term innovation and growth,” he added.

The scientist has previously called for a “kill switch”, code written to detect if the technology is being misused, to be built into the software to save humanity from disaster.

Last year, the British-born expert, who is now a professor of computer science at the University of California, Berkeley, said a global treaty to regulate AI was needed before software progresses to the point where it can no longer be controlled. He warned that large language models and deepfake technology could be used for fraud, disinformation and bioterrorism if left unchecked.

Despite the UK convening a global AI summit last year, Rishi Sunak’s government said it would refrain from creating specific AI legislation in the short term in favour of a light-touch regime.

The government is set to publish a series of tests that would need to be met before it passes new laws on artificial intelligence, reports suggest.

Ministers will publish criteria in the coming weeks on the circumstances in which they would enact curbs on powerful AI models created by leading companies such as OpenAI and Google, according to the Financial Times.

The UK’s cautious approach to regulating the sector contrasts with moves around the world. The EU has agreed a wide-ranging AI Act that creates strict new obligations for leading AI companies making high-risk technologies.

In the US, President Joe Biden has issued an executive order to compel AI companies to show they are tackling threats to national security and consumer privacy. China has also provided detailed guidance on the development of AI, emphasising the need to control content.